Support for Intel GPUs


| Author | Message |

|---|---|

Bill F - Joined: 27 Sep 21 Posts: 12 Credit: 2,996,987 RAC: 2,707 |

OK, it's old and weak, but it qualifies as an Intel GPU ... The first three tasks downloaded and one is running now. Thanks, Bill F. In October of 1969 I took an oath to support and defend the Constitution of the United States against all enemies, foreign and domestic; There was no expiration date.

|

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

Yes, we only use integer ops here. Just a heads-up: someone may use leaked Engineering Sample (ES) CPUs to run BOINC tasks, and those might not produce reliable results. If we want the results to be 100% good, it would be better to require double-task validation, i.e. sending each task to 2 computers and comparing the results. And in the worst case, someone could deliberately attack this project by modifying their local apps to send incorrect results... |
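The double-task validation suggested above can be sketched roughly as follows. This is a minimal illustration, not the project's validator: the host IDs, the result digests, and the `validate_quorum` helper are all hypothetical. Because the app uses only integer ops, the sketch assumes results from honest, healthy hosts can be compared for exact equality.

```python
from collections import Counter

def validate_quorum(results, min_quorum=2):
    """Return the agreed result if at least `min_quorum` hosts match, else None.

    `results` maps a (hypothetical) host ID to the result digest it returned.
    """
    if len(results) < min_quorum:
        return None  # not enough results yet to decide
    value, votes = Counter(results.values()).most_common(1)[0]
    return value if votes >= min_quorum else None

# Hypothetical digests: two hosts agree, so the result is accepted.
agree = {"host_a": "9f2c", "host_b": "9f2c"}
# One host (bad hardware or a tampered app) disagrees, so nothing is accepted
# and the task would need to go to another host.
disagree = {"host_a": "9f2c", "host_b": "0000"}

print(validate_quorum(agree))
print(validate_quorum(disagree))
```

The trade-off is that every task is computed at least twice, so throughput is roughly halved in exchange for catching unreliable or malicious hosts.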

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

|

Eric Driver - Joined: 8 Jul 11 Posts: 1388 Credit: 690,621,967 RAC: 828,674 |

"Here we go:" Thanks for sharing! The results themselves look good. My concern is that the run times are much higher than expected. The Arc B580 should be similar to a 4060. My 3070 Ti averages about 10 minutes per WU, which should be comparable. Your run times are about 4 times longer than that. Any idea what could be causing this? Are you running multiple GPU threads simultaneously? Is the card at 100% utilization? It's possible Intel's OpenCL driver is not efficient, but I doubt that can account for a factor-of-4 slowdown. |

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

Thunderbolt 3 eGPU enclosures (mine is: https://www.sonnettech.com/product/egpu-breakaway-box/overview.html) only support PCIe 3.0 x4, which could be one reason for the long run times. Connecting the GPU via Thunderbolt may also increase the latency between the CPU and the GPU. I don't currently have a desktop PC to test the card in, so I can't exclude these factors. Here is my AMD RX 6950 XT card's performance when running in the same eGPU enclosure: https://numberfields.asu.edu/NumberFields/result.php?resultid=246410785 which wasn't very good either. My laptop's CPU (i7-12700H) may be another reason, because many gaming users of the Arc B580 have reported bad gaming performance with the card on older CPUs; they call it the "CPU overhead issue". My laptop also doesn't support enabling the Resizable BAR feature for the external GPU, which Intel Arc GPUs require for optimal performance. From what I observed, GPU utilization was high enough (above 90% on average) while the NumberFields app was working. |

|

Joined: 28 Oct 11 Posts: 181 Credit: 298,807,538 RAC: 232,800 |

Two memories from the early days of Intel on-die integrated GPUs (testing done on host 17234 and host 33342). 1) Accuracy: floating-point precision was reduced if the Intel OpenCL compiler was allowed to optimise the code with the "fused multiply+add" opcode. This effect became more pronounced with the later and more powerful GPU models - the HD 530 showed it much more than the HD 4600. 2) Speed: the runtime support for the Intel OpenCL compiled code requires very little CPU - but boy, does it want it FAST! By default, BOINC will schedule one CPU task per CPU core, plus GPU tasks requiring less than 100% CPU utilisation. This over-commitment of the CPU causes a slowdown of up to 7-fold. There are three ways of mitigating this: a) Reduce the number of CPU tasks running alongside the Intel GPU app. b) Declare the Intel GPU app to require 100% CPU usage. c) Dangerous, use with care: set the Intel GPU app to run at REAL TIME priority, via a utility like Process Lasso. I experienced nothing worse than a momentary screen stutter once per task, but YMMV. References: 1) Private testing with a volunteer SETI@home developer - he built them, I broke them! 2) First reported on the Einstein@home message boards. |
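Option b) above is normally expressed through BOINC's `app_config.xml`, placed in the project's directory on the client. The sketch below only generates such a file's contents; the app name `example_gpu_app` is a placeholder (an assumption, not the real NumberFields app name), and it must be replaced with the name the project actually uses, as listed in the client's `client_state.xml`.

```python
import xml.etree.ElementTree as ET

def build_app_config(app_name, gpu_usage=1.0, cpu_usage=1.0):
    """Build app_config.xml text reserving `cpu_usage` CPUs per GPU task."""
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    gpu = ET.SubElement(app, "gpu_versions")
    # gpu_usage: fraction of a GPU each task uses (1.0 = one task per GPU).
    ET.SubElement(gpu, "gpu_usage").text = str(gpu_usage)
    # cpu_usage: CPUs the scheduler budgets per GPU task (1.0 = a full core).
    ET.SubElement(gpu, "cpu_usage").text = str(cpu_usage)
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")

# "example_gpu_app" is a hypothetical placeholder for the real app name.
config_text = build_app_config("example_gpu_app")
print(config_text)
```

With `cpu_usage` set to 1.0 the client counts a whole core against each GPU task, so it runs one fewer CPU task alongside it. After saving the file, the client has to re-read its config files (or be restarted) for the change to take effect.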

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

In my case, I wasn't running any other CPU tasks while the GPU app was working. I still suspect the latency and bandwidth limits of Thunderbolt 3 are the main cause. |

Eric Driver - Joined: 8 Jul 11 Posts: 1388 Credit: 690,621,967 RAC: 828,674 |

Although Thunderbolt 3 is much slower than a full PCI Express slot, I find it hard to believe it could be the problem. The total amount of data being transferred by the NumberFields app is relatively small, on the order of GBs, and Thunderbolt 3 is rated in the tens of gigabits per second (a few GB/s). So if the Thunderbolt connection is working as advertised, it should only add a few seconds to the total run time. As Richard alluded to, problems like this are usually due to an overcommitted CPU. Recall that the NumberFields app uses a CPU core to buffer polynomials to be tested while the GPU works on a previous buffer of data. When the GPU finishes the current buffer, it hands the results back to the CPU and gets the next buffer - if the CPU doesn't have the next buffer ready, then the GPU idles while it waits. To check this, I usually monitor the CPU during execution of a task to see how much it is being used. As an example, I just checked my 4070Ti and it is using about 50% of a CPU core - this is good, because it means the CPU is waiting on the GPU half the time. You want the CPU to wait on the GPU, not the other way around. You mentioned you have almost full GPU utilization, which usually means the GPU is not idle. However, I wonder if that is being reported correctly. Sometimes the GPU fan or temperature readings are a better indicator of whether the card is actually being fully utilized. I find it odd that you are at full utilization with a single thread. On my 4070Ti I need to run 2 threads simultaneously, or else my utilization is only 50% (that's because the CPU can't feed the GPU fast enough, so it idles half the time). |
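The CPU-feeds-GPU hand-off described above can be modelled with a toy two-stage pipeline. This is only a sketch under simplifying assumptions (fixed per-buffer times, one buffer prepared ahead, no transfer cost); `simulate_pipeline` and the timings are hypothetical and not taken from the NumberFields source.

```python
def simulate_pipeline(n_buffers, cpu_prep_s, gpu_proc_s):
    """Return (gpu_utilization, cpu_utilization) for a simple two-stage pipeline."""
    cpu_ready = cpu_prep_s  # time at which the CPU has the first buffer ready
    gpu_done = 0.0          # time at which the GPU finished the previous buffer
    for _ in range(n_buffers):
        # The hand-off happens once the buffer is ready AND the GPU is free.
        handoff = max(cpu_ready, gpu_done)
        gpu_done = handoff + gpu_proc_s
        # The CPU starts preparing the next buffer right after the hand-off.
        cpu_ready = handoff + cpu_prep_s
    total = gpu_done
    return n_buffers * gpu_proc_s / total, n_buffers * cpu_prep_s / total

# CPU prepares a buffer in half the time the GPU needs: the GPU stays busy
# and the CPU core is idle about half the time (the healthy case).
gpu_fast_cpu, cpu_fast_cpu = simulate_pipeline(1000, cpu_prep_s=0.5, gpu_proc_s=1.0)
# CPU needs twice as long as the GPU: the GPU idles about half the time.
gpu_slow_cpu, cpu_slow_cpu = simulate_pipeline(1000, cpu_prep_s=2.0, gpu_proc_s=1.0)

print(f"fast CPU: GPU {gpu_fast_cpu:.0%} busy, CPU {cpu_fast_cpu:.0%} busy")
print(f"slow CPU: GPU {gpu_slow_cpu:.0%} busy, CPU {cpu_slow_cpu:.0%} busy")
```

In this model, anything that slows the CPU side (an overcommitted core, driver overhead) shows up directly as GPU idle time, while a faster GPU makes the same CPU delay proportionally more expensive.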

|

Joined: 4 Jan 25 Posts: 20 Credit: 91,988,569 RAC: 556,752 |

"In my case, I didn't run any other CPU tasks when the GPU app is working. I still suspect the latency and bandwidth limits of the Thunderbolt 3 is the main cause." Very unlikely IMHO. The fact that the application relies significantly on CPU support to feed the GPU means that the faster the video card, the greater the impact of delays in getting the data from the CPU. However, the actual bandwidth being used between the CPU & the GPU is next to nothing. I have two i7-8700K systems, one of which has a GTX 1070 and an RTX 2060 Super. With NumberFields set for 1 CPU + 1 NVidia GPU for GPU work, the bandwidth usage for the RTX 2060 Super is 2% (PCIe v3.0, x16) at 90-98% load (it's dropped from a steady 99% with the current "chewier" Tasks), and for the GTX 1070 it's 4% (5% peaks) (PCIe v3.0, x4) at 99% load (the GPU memory controller load is quite high - RTX 2060 Super is 50%, GTX 1070 is 65%). So any extra latency from the Thunderbolt 3 connection would have to be truly massive to have such a significant impact on processing times IMHO. The issue could be an example of the immaturity of the Battlemage drivers - there was a recent update for the Linux drivers, which resulted in some very significant improvements. It will be interesting to see, when a Windows driver update comes out, whether the new drivers address the compute issues as they did in Linux. It's very possible that the type of compute work NumberFields does is of the same type that is so hugely impacted by the driver issues. NB - I just wanted to say thank you for bumping up the Credit per Task with this present series of Tasks going through. Even with their significantly increased processing time, the bump in Credit per Task appears to have kept the amount of Credit per hour pretty much on par with what it was previously. So, thanks again. Grant Darwin NT, Australia. |
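To put the bus-usage percentages above into absolute terms, here is a rough back-of-the-envelope calculation. It assumes the reported percentage is a fraction of the link's theoretical bandwidth, and it uses roughly 0.985 GB/s per PCIe 3.0 lane (8 GT/s with 128b/130b encoding); real Thunderbolt 3 throughput will be somewhat lower than the raw x4 figure.

```python
PCIE3_GB_PER_S_PER_LANE = 0.985  # approx. PCIe 3.0: 8 GT/s * 128/130 / 8 bits

def link_bandwidth(lanes):
    """Theoretical one-direction bandwidth of a PCIe 3.0 link, in GB/s."""
    return lanes * PCIE3_GB_PER_S_PER_LANE

def used_bandwidth(lanes, utilization):
    """Bandwidth implied by a reported bus utilization (0.0 to 1.0), in GB/s."""
    return link_bandwidth(lanes) * utilization

rtx_2060s_gbs = used_bandwidth(lanes=16, utilization=0.02)  # 2% of x16
gtx_1070_gbs = used_bandwidth(lanes=4, utilization=0.04)    # 4% of x4
egpu_link_gbs = link_bandwidth(lanes=4)                     # eGPU: PCIe 3.0 x4

print(f"RTX 2060 Super uses about {rtx_2060s_gbs:.2f} GB/s")
print(f"GTX 1070 uses about {gtx_1070_gbs:.2f} GB/s")
print(f"PCIe 3.0 x4 link offers about {egpu_link_gbs:.2f} GB/s")
```

Under these assumptions both cards use well under a tenth of what a PCIe 3.0 x4 link can carry, which is consistent with the point that raw bandwidth is unlikely to be the bottleneck.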

Eric Driver - Joined: 8 Jul 11 Posts: 1388 Credit: 690,621,967 RAC: 828,674 |

Hey Grant - thanks for pointing out the issue with the Intel drivers. I didn't realize they were that bad. Hopefully they will get updated soon. |

|

Joined: 4 Jan 25 Posts: 20 Credit: 91,988,569 RAC: 556,752 |

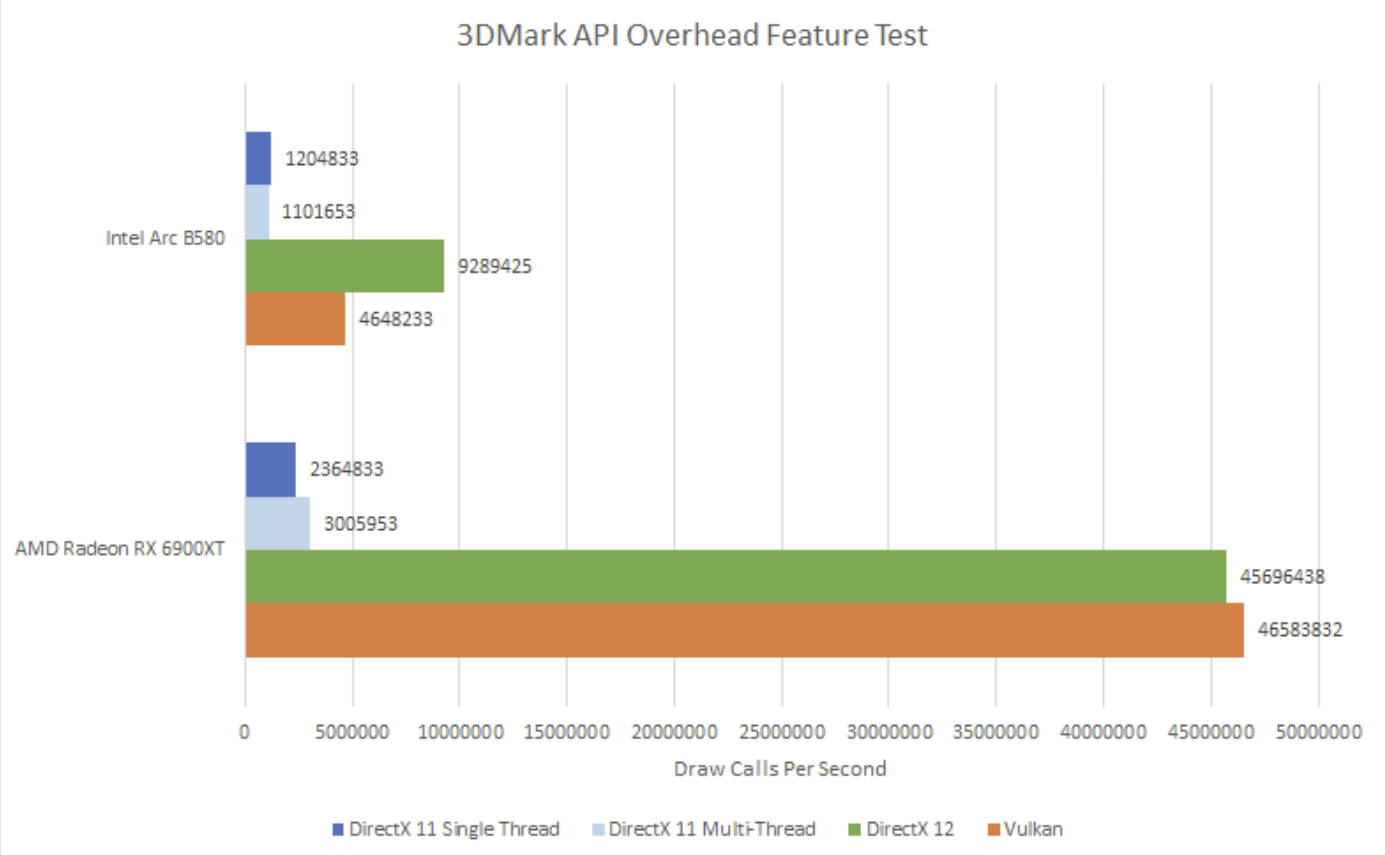

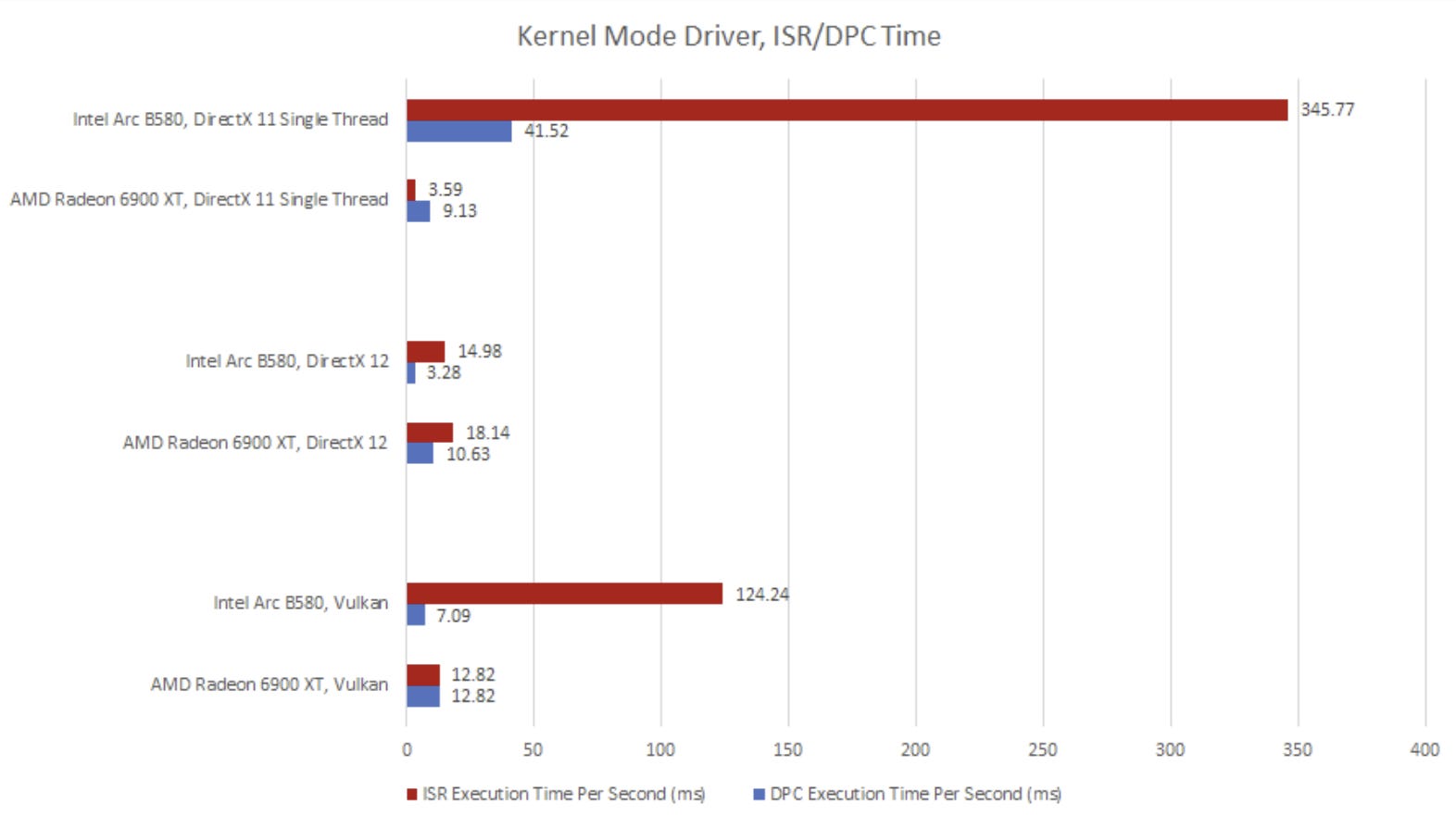

Looks like a new driver was released for Windows on Jan 16th; unfortunately, none of the fixes in the release notes mention compute performance. ZhiweiLiang might like to install the latest driver (if not already done) to see if there is any improvement in performance. Unfortunately, it could be a while before there is any significant update to the drivers: while the initial Windows gaming reviews showed very good performance from the B580, all of those tests were done with the latest & greatest CPUs. Being a more budget-targeted card, when tested with older CPUs its performance has been found to drop off, a lot. This is what Chips & Cheese found when they had a look at the issue (sorry about the image sizes): "Digging into Driver Overhead on Intel's B580". "For most users – indeed, almost all users – the value proposition of the B580 isn't as strong as it initially appeared. Additional factors must now be considered. For example, to fully benefit from the B580, you'll need a relatively modern and reasonably powerful CPU. Anything slower than the Ryzen 5 5600, and the recommendation shifts firmly to a Radeon or GeForce GPU instead." (Intel Arc B580 GPU Re-Review: Old PC vs New PC Test) Grant Darwin NT, Australia. |

|

Joined: 27 Dec 19 Posts: 7 Credit: 665,883 RAC: 0 |

I do not get email notifications for new responses. It is already set to notify immediately, by email. I use gmail. |

Eric Driver - Joined: 8 Jul 11 Posts: 1388 Credit: 690,621,967 RAC: 828,674 |

"I do not get email notifications for new responses." That's odd, subscribing works for me. And your email address looks valid. I wonder if it's being blocked by the outgoing ASU mail server, or somewhere else along the way. |

|

Joined: 4 Jan 25 Posts: 20 Credit: 91,988,569 RAC: 556,752 |

"I use gmail." Nothing in your spam or bin folders? Grant Darwin NT, Australia. |

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

"Looks like a new driver was released for Windows on Jan 16th; unfortunately, none of the fixes in the release notes mention compute performance." |

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

By the way, the NumberFields app takes up 5GB of VRAM on the Intel Arc B580. Is that normal? |

Eric Driver - Joined: 8 Jul 11 Posts: 1388 Credit: 690,621,967 RAC: 828,674 |

I don't think so. My 4070Ti only uses 522MB per thread. That's with default GPU settings. Did you happen to change the gpuLookupTable_v402.txt file? |

|

Joined: 14 Mar 19 Posts: 20 Credit: 140,562,911 RAC: 2,607 |

I didn't change it, and the B580 doesn't appear in it. Is there any change you would suggest? Is numBlocks the number of blocks of VRAM available for the card? How do I determine how large each block is? And threadsPerBlock is 32 for most cards? Will there be threadsPerBlock*numBlocks threads in one NumberFields app? I think I can try playing around with different values... |

Eric Driver - Joined: 8 Jul 11 Posts: 1388 Credit: 690,621,967 RAC: 828,674 |

No, numBlocks is not directly related to VRAM. But the higher numBlocks is, the more GPU RAM will be needed. The GPU lookup table has been discussed before. See for example: https://numberfields.asu.edu/NumberFields/forum_thread.php?id=472&postid=2990#2990 To summarize, threadsPerBlock is the number of threads that are run in lockstep. 32 works best for Nvidia cards and, unless I am mistaken, 64 worked best for AMD cards, at least on the one card I tested. I have no idea what the optimal value would be for Intel cards; it would depend on their GPU architecture. numBlocks is not as critical, and can be increased until all of the available cores on the GPU are being utilized. To answer your last question: yes, there will be threadsPerBlock*numBlocks threads running simultaneously within the GPU app. |
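The relationship between the two lookup-table parameters can be illustrated with a few lines of arithmetic. The numbers below are arbitrary example values, not recommended settings or the project's defaults, and `total_threads` is a hypothetical helper; in OpenCL terms, threadsPerBlock plays the role of the work-group (local) size and the product is the global work size.

```python
def total_threads(num_blocks, threads_per_block):
    """Number of GPU threads in flight: one block is one lockstep group."""
    if num_blocks <= 0 or threads_per_block <= 0:
        raise ValueError("both parameters must be positive")
    return num_blocks * threads_per_block

# Arbitrary example: 1024 blocks of 32 threads each.
example_total = total_threads(num_blocks=1024, threads_per_block=32)
print(example_total)

# Sweeping threadsPerBlock while holding the total thread count fixed, as one
# might do when experimenting on a card that is missing from the lookup table.
# The candidate widths are illustrative guesses only.
for tpb in (16, 32, 64):
    blocks = example_total // tpb
    print(f"threadsPerBlock={tpb:>2}  numBlocks={blocks:>4}  "
          f"total={total_threads(blocks, tpb)}")
```

Holding the product constant while varying threadsPerBlock separates the effect of the lockstep width from the effect of simply launching more threads (and using more GPU RAM).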

|

Joined: 4 Jan 25 Posts: 20 Credit: 91,988,569 RAC: 556,752 |

"I don't think so. My 4070Ti only uses 522MB per thread. That's with default GPU settings. Did you happen to change the gpuLookupTable_v402.txt file?" It is rather high. On a Windows system, with all default settings ("GPU was not found in the lookup table. Using default values:") and running one Task at a time, my RTX 2060 (6GB of VRAM) uses around 470MB. My RTX 2060 Super (8GB of VRAM) uses 603MB. My RTX 4070Ti Super (16GB of VRAM) uses 1.3 to 1.5GB. I'm guessing the amount of VRAM in use depends on the number of Compute Units in use, or at the very least on the number of Compute Units the application thinks the video card has available. Being a new architecture, the application defaults may not return an accurate result when querying the video card for what it has available to use, which could explain the poor performance relative to the previous generation. Perhaps the application asks what is available but misinterprets the returned result, causing the card to be given more than it can actually handle and resulting in unexpectedly long processing times? Grant Darwin NT, Australia. |

Copyright © 2025 Arizona State University